Transformer Models in Image Processing

Transformer models have significantly changed the landscape of computer vision. Traditionally, Convolutional Neural Networks (CNNs) dominated image analysis tasks because they efficiently capture spatial hierarchies, building up from edges and textures to object parts. With the rise of transformer models, however, the approach to image processing has shifted. Transformers, initially designed for Natural Language Processing (NLP), have proven effective on visual tasks by using self-attention to relate every part of an input to every other part and by processing all of those parts in parallel.

As a result, transformer models can offer greater flexibility than their predecessors and, given enough training data, higher accuracy on many vision benchmarks, along with a strong ability to model complex relationships in visual data. This article explores how these models work, their applications, advantages, challenges, and future trends. By the end, you’ll understand why transformers are reshaping the field of computer vision and pushing the boundaries of innovation.

How Transformer Models Work in Image Processing

Transformer models in image processing rely on a unique architecture that sets them apart from traditional methods like Convolutional Neural Networks (CNNs). Their strength lies in the self-attention mechanism, which enables them to capture long-range dependencies and complex patterns in visual data. To understand their impact, let’s explore their evolution and working principles.

The Evolution from CNNs to Transformers

For years, CNNs were the standard for image processing tasks. They excel at detecting local features through convolutional layers, making them effective for image classification and object detection. However, each convolution sees only a small neighborhood of pixels, so a CNN builds up global context gradually through many stacked layers, which makes relationships between distant regions of an image harder to capture.

In contrast, transformer models in image processing use attention mechanisms that let every part of an image interact with every other part within a single layer. Because these interactions are computed in parallel and are not limited to a local neighborhood, the model can draw on context from the entire image when interpreting any one region.

Self-Attention Mechanism

The core of transformer models lies in the self-attention mechanism. It determines the importance of different parts of an image relative to each other, helping the model focus on essential regions without losing the broader context.

- Query, Key, and Value Vectors: Each image patch embedding is multiplied by three learned weight matrices to produce a Query (Q), a Key (K), and a Value (V) vector.

- Attention Scores: The Query vector of each patch is compared, using a dot product scaled by the square root of the Key dimension, with the Key vectors of all patches (including its own) to calculate attention scores.

- Weighted Sum: A softmax turns each patch's scores into weights that sum to 1, and the patch's output is the weighted sum of all Value vectors.

This process ensures that transformer models in image processing maintain global awareness, enhancing their ability to capture intricate relationships between image elements.
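The three steps above amount to scaled dot-product attention, softmax(QK^T / sqrt(d)) V, where d is the Key dimension. Below is a minimal NumPy sketch, assuming the patches have already been converted to embedding vectors. The projection matrices are random placeholders for weights a real model would learn, and the names `self_attention` and `softmax` are illustrative rather than taken from any particular library.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(patches, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    patches: (N, D) array with one embedding per image patch.
    w_q, w_k, w_v: (D, d) projection matrices; learned in a real model,
    random placeholders here.
    Returns the (N, d) outputs and the (N, N) attention weights.
    """
    q = patches @ w_q  # one Query vector per patch
    k = patches @ w_k  # one Key vector per patch
    v = patches @ w_v  # one Value vector per patch
    # Compare every Query with every Key, including the patch's own Key.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    # Turn each row of scores into weights that sum to 1.
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted sum of all Value vectors.
    return weights @ v, weights

rng = np.random.default_rng(0)
N, D, d = 9, 32, 16  # e.g. a 3x3 grid of patches with 32-dim embeddings
x = rng.normal(size=(N, D))
w_q, w_k, w_v = (rng.normal(size=(D, d)) / np.sqrt(D) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (9, 16) (9, 9)
```

Every row of `attn` sums to 1, and row i shows how strongly patch i draws on each of the nine patches.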

Multi-Head Attention in Image Processing

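Multi-head attention takes self-attention a step further. Instead of computing a single attention pattern, the model runs several attention operations, called heads, in parallel, each with its own learned Query, Key, and Value projections. The heads' outputs are concatenated and combined by a final linear projection. Because each head can focus on a different aspect of an image, the model captures more diverse features, leading to more accurate and comprehensive image analysis.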

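In the context of image processing, different heads can specialize in different relationships: in the original Vision Transformer, for example, some heads attend mostly to nearby patches while others attend across the whole image even in the earliest layers. This variety helps transformers pick up on shapes, textures, and colors, and it is one reason they can match or outperform CNNs in tasks requiring detailed feature extraction and contextual understanding, particularly when large-scale pretraining is available.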
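Multi-head attention can be sketched by splitting the projected vectors into equal slices, running the same attention computation on each slice, and concatenating the results. This NumPy version is an illustration under simple assumptions (random weights, no masking, no bias terms), and the function name `multi_head_attention` is hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Multi-head self-attention over N patch embeddings.

    x: (N, D); w_q, w_k, w_v, w_o: (D, D). D must be divisible by num_heads.
    Returns the (N, D) output and the (heads, N, N) attention weights.
    """
    n, dim = x.shape
    head_dim = dim // num_heads

    def split_heads(t):
        # (N, D) -> (heads, N, head_dim): each head gets its own slice of the projection.
        return t.reshape(n, num_heads, head_dim).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    # Each head computes its own (N, N) attention pattern independently.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)
    heads = weights @ v  # (heads, N, head_dim)
    # Concatenate the heads back to (N, D) and mix them with an output projection.
    concat = heads.transpose(1, 0, 2).reshape(n, dim)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
N, D, H = 10, 64, 4
x = rng.normal(size=(N, D))
w_q, w_k, w_v, w_o = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(4))
out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=H)
print(out.shape, weights.shape)  # (10, 64) (4, 10, 10)
```

Each of the four heads produces its own 10x10 attention pattern, which is what allows different heads to specialize.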

Applications of Transformer Models in Image Processing

Transformer models in image processing have reshaped computer vision by offering advanced capabilities across a wide range of tasks. Their ability to capture long-range dependencies and process visual data with high accuracy has made them a leading choice in many applications. Let’s explore some of the most significant use cases:

1. Image Classification

One of the primary applications of transformer models in image processing is image classification. Unlike CNNs, transformers relate all regions of the image to one another from the first layer onward, which supports better context understanding and feature recognition. Vision Transformers (ViTs), for example, have achieved state-of-the-art performance in classifying images across diverse datasets, particularly when pretrained on very large image collections.

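ViTs divide an image into fixed-size patches (for example, 16x16 pixels), flatten and linearly project each patch into an embedding vector, add position information, and then apply self-attention across the resulting sequence of patches. Because every patch can attend to every other patch, a detail in one region can inform how the model interprets another, which reduces the risk of overlooking critical details and enhances classification accuracy.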
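The patch-and-embed step can be sketched in NumPy as follows. The sizes mirror the ViT-Base/16 setup (224x224 RGB input, 16x16 patches, 768-dimensional embeddings, plus a [CLS] token whose final state is used for classification), but the weights here are random or zero placeholders for learned parameters, and `patchify` and `embed_patches` are illustrative names.

```python
import numpy as np

def patchify(image, patch_size):
    """Cut an (H, W, C) image into non-overlapping square patches.

    Returns (num_patches, patch_size * patch_size * C), patches in row-major order.
    H and W must be divisible by patch_size.
    """
    h, w, c = image.shape
    p = patch_size
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C): group pixels by patch.
    grid = image.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    # Flatten each patch into a single vector.
    return grid.reshape(-1, p * p * c)

def embed_patches(patches, w_embed, cls_token, pos_embed):
    """Linearly project patches, prepend a [CLS] token, add position embeddings."""
    tokens = patches @ w_embed
    tokens = np.vstack([cls_token[None, :], tokens])
    return tokens + pos_embed

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
patches = patchify(image, patch_size=16)  # 14 x 14 = 196 patches of 768 values
D = 768
w_embed = rng.normal(size=(patches.shape[1], D)) * 0.02  # hypothetical learned weights
cls_token = np.zeros(D)                                  # learned in a real model
pos_embed = rng.normal(size=(patches.shape[0] + 1, D)) * 0.02
tokens = embed_patches(patches, w_embed, cls_token, pos_embed)
print(patches.shape, tokens.shape)  # (196, 768) (197, 768)
```

A 224x224 image yields a sequence of 14 x 14 = 196 patch tokens plus one [CLS] token; the transformer layers then operate on this sequence much as they would on a sequence of word embeddings.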

2. Object Detection

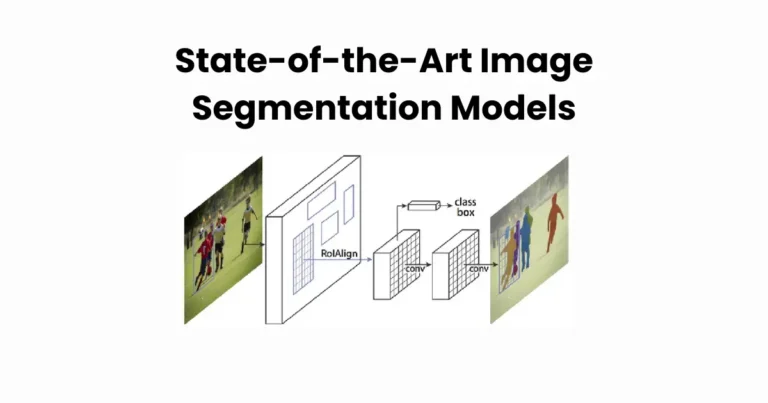

Object detection involves identifying and localizing objects within an image, a task where transformers have proven highly competitive. Traditional detectors such as Faster R-CNN rely on region proposals, anchor boxes, and hand-designed post-processing, which add computational cost and make the pipeline more complex.

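Transformer models in image processing simplify this pipeline by treating detection as a set prediction problem: a fixed set of learned object queries attends to the image, and each query predicts at most one object, so all objects are detected in parallel. The Detection Transformer (DETR) is trained with a one-to-one matching between its predictions and the ground-truth objects, which removes the need for hand-designed post-processing steps such as non-maximum suppression. On the COCO benchmark, DETR matched the accuracy of a strong Faster R-CNN baseline, although it required much longer training and performed worse on small objects.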
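DETR's one-to-one matching can be illustrated with a small sketch. Assuming a simplified matching cost (the negative probability of the correct class plus a weighted L1 distance between boxes; DETR's actual cost also includes a generalized IoU term) and an illustrative box weight of 5.0, the code below finds the assignment of predictions to ground-truth objects with the lowest total cost. It uses brute-force search over permutations, which is only practical for toy sizes; DETR itself solves this step with the Hungarian algorithm. The function and argument names are hypothetical.

```python
import itertools
import numpy as np

def match_predictions(pred_probs, pred_boxes, gt_labels, gt_boxes, box_weight=5.0):
    """Lowest-cost one-to-one matching of predictions to ground-truth objects.

    pred_probs: (Q, K) class probabilities for Q predictions (object queries).
    pred_boxes, gt_boxes: (Q, 4) and (G, 4) boxes as (cx, cy, w, h) in [0, 1].
    gt_labels: (G,) integer class ids, with G <= Q.
    Returns a list of (prediction_index, gt_index) pairs.
    """
    num_q, num_g = len(pred_boxes), len(gt_boxes)
    # Pairing prediction q with target g is expensive if q gives the target's
    # class a low probability or if the two boxes are far apart (L1 distance).
    class_cost = -pred_probs[:, gt_labels]                                     # (Q, G)
    box_cost = np.abs(pred_boxes[:, None, :] - gt_boxes[None, :, :]).sum(-1)  # (Q, G)
    cost = class_cost + box_weight * box_cost
    best, best_total = None, np.inf
    # perm[g] is the prediction assigned to target g; no prediction is used twice.
    for perm in itertools.permutations(range(num_q), num_g):
        total = sum(cost[q, g] for g, q in enumerate(perm))
        if total < best_total:
            best, best_total = perm, total
    return [(q, g) for g, q in enumerate(best)]

pred_probs = np.array([[0.1, 0.8, 0.1],
                       [0.7, 0.2, 0.1],
                       [0.3, 0.3, 0.4],
                       [0.2, 0.1, 0.7]])
pred_boxes = np.array([[0.50, 0.50, 0.50, 0.50],
                       [0.31, 0.29, 0.20, 0.21],
                       [0.10, 0.90, 0.10, 0.10],
                       [0.69, 0.71, 0.20, 0.20]])
gt_labels = np.array([2, 0])
gt_boxes = np.array([[0.7, 0.7, 0.2, 0.2],
                     [0.3, 0.3, 0.2, 0.2]])
matches = match_predictions(pred_probs, pred_boxes, gt_labels, gt_boxes)
print(matches)  # [(3, 0), (1, 1)]
```

Each ground-truth object receives exactly one prediction, and the remaining predictions are trained to output a "no object" class, which is why duplicate detections do not need to be suppressed afterwards.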

3. Image Segmentation

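Image segmentation involves dividing an image into distinct regions by assigning a class label to every pixel. Transformers approach this task by modeling the relationships between image patches, so that the label of each region is informed by context from the whole image; the patch-level predictions are then upsampled to full pixel resolution.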

With models like Segmenter and other Vision Transformer-based segmentation networks, transformer models in image processing achieve strong accuracy in identifying object boundaries and distinguishing between different areas of an image. This makes them well suited to applications in medical imaging, autonomous driving, and satellite image analysis.
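A simplified sketch of the final step, loosely modeled on Segmenter's mask decoder: each patch embedding is scored against one embedding per class using a dot product of L2-normalized vectors, and the winning class for each patch is expanded to cover that patch's pixels. Segmenter's decoder additionally processes the class and patch tokens jointly with transformer layers and upsamples the class scores before choosing a label; this sketch skips both and copies labels with nearest-neighbour upsampling. The embeddings are synthetic and `segment_from_patches` is a hypothetical name.

```python
import numpy as np

def segment_from_patches(patch_emb, class_emb, grid_h, grid_w, patch_size):
    """Turn patch embeddings into a per-pixel label map.

    patch_emb: (grid_h * grid_w, D) output patch tokens from an encoder.
    class_emb: (K, D) one embedding per class.
    Returns an (grid_h * patch_size, grid_w * patch_size) array of class ids.
    """
    # Score every patch against every class with L2-normalized dot products.
    p = patch_emb / np.linalg.norm(patch_emb, axis=1, keepdims=True)
    c = class_emb / np.linalg.norm(class_emb, axis=1, keepdims=True)
    scores = p @ c.T  # (num_patches, K)
    patch_labels = scores.argmax(axis=1).reshape(grid_h, grid_w)
    # Nearest-neighbour upsampling: every pixel inherits its patch's label.
    return np.repeat(np.repeat(patch_labels, patch_size, axis=0), patch_size, axis=1)

rng = np.random.default_rng(0)
class_emb = np.eye(3, 8)  # 3 hypothetical classes in an 8-dim space
true_labels = np.array([[0, 1, 1],
                        [2, 2, 0]])  # a 2 x 3 grid of patches
patch_emb = class_emb[true_labels.ravel()] + 0.01 * rng.normal(size=(6, 8))
mask = segment_from_patches(patch_emb, class_emb, grid_h=2, grid_w=3, patch_size=4)
print(mask.shape)  # (8, 12)
```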

4. Super-Resolution and Image Generation

Transformers have also made significant strides in enhancing image quality and creating realistic visuals. In super-resolution tasks, they upscale low-resolution images by predicting high-frequency details, resulting in clearer and more detailed outputs.
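Many super-resolution networks, transformer-based ones included, produce the enlarged image with a sub-pixel convolution step, often called pixel shuffle: the network predicts r x r values for each low-resolution location, stored as extra channels, and those channels are rearranged into an r-times larger image. The NumPy sketch below shows only this rearrangement, assuming channel-first arrays; the feature values are synthetic and `pixel_shuffle` is an illustrative name.

```python
import numpy as np

def pixel_shuffle(features, r):
    """Rearrange (C * r * r, H, W) features into a (C, H * r, W * r) image.

    Output pixel (c, h * r + i, w * r + j) comes from input channel
    c * r * r + i * r + j at low-resolution location (h, w).
    """
    cr2, h, w = features.shape
    c = cr2 // (r * r)
    x = features.reshape(c, r, r, h, w)  # split channels into (C, i, j)
    x = x.transpose(0, 3, 1, 4, 2)       # (C, H, i, W, j)
    return x.reshape(c, h * r, w * r)

features = np.arange(16).reshape(4, 2, 2)  # C=1, r=2, on a 2x2 low-res grid
image = pixel_shuffle(features, r=2)
print(image.shape)  # (1, 4, 4)
```

The four channels at each low-resolution location become one 2x2 block of output pixels, which is how the predicted high-frequency detail is placed at the finer resolution.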

For image generation, transformers serve as building blocks inside generative approaches such as Generative Adversarial Networks (GANs) and diffusion models, where attention mechanisms help produce high-quality, realistic images. This application is particularly valuable in creative industries like game design, animation, and digital art.

In short, the versatility of transformer models has expanded the possibilities of image processing, enabling breakthroughs across various domains and setting new performance benchmarks.

Advantages of Transformer Models in Image Processing

Transformer models in image processing have gained widespread popularity due to their unique architecture and powerful performance. They offer several advantages over traditional methods like Convolutional Neural Networks (CNNs), making them highly effective for complex visual tasks. Let’s explore the key benefits:

1. Enhanced Feature Extraction

One of the most significant advantages of transformer models in image processing is their ability to capture intricate and detailed features. Unlike CNNs, which focus on local patterns through convolutional layers, transformers use self-attention mechanisms to consider every part of an image simultaneously.

This global perspective allows transformers to recognize subtle relationships and long-range dependencies between different elements in an image. As a result, they extract richer and more meaningful features, leading to improved performance in tasks like classification and segmentation.

2. Better Long-Range Dependencies

Traditional models often struggle with understanding the relationships between distant parts of an image. Transformer models in image processing overcome this limitation by using self-attention, which evaluates the importance of each image patch in relation to all others.

This capability ensures that transformers maintain context across the entire image, enhancing their ability to capture global patterns and dependencies. It’s particularly beneficial in tasks like object detection, where understanding spatial relationships is crucial.

3. Improved Efficiency

Although transformers require significant computational power during training, their computations map well onto modern hardware. Self-attention and the feed-forward layers reduce to large matrix multiplications over all image patches at once, which GPUs and TPUs execute in parallel rather than step by step.

Multi-head attention fits the same pattern: all heads are computed side by side, so focusing on multiple aspects of an image at once adds no sequential steps. This parallelism makes good use of accelerator hardware and keeps training time manageable without sacrificing accuracy.

4. Greater Flexibility

Transformer models are highly adaptable and can be applied to various image processing tasks, from classification and object detection to super-resolution and image generation. Their architecture supports easy integration with other techniques, making them suitable for diverse applications.

For example, Vision Transformers (ViTs) have been successfully combined with convolutional layers to create hybrid models, leveraging the strengths of both approaches. This flexibility allows developers to fine-tune models for specific use cases, enhancing their overall effectiveness.

5. Scalability

Transformer models in image processing scale well with larger datasets and more complex tasks. In the original Vision Transformer experiments, ViTs trailed comparable CNNs (ResNets) when trained on ImageNet alone but overtook them when pretrained on much larger datasets, suggesting that transformers benefit more from additional data.

This scalability makes transformers well suited to projects with extensive datasets and to large-scale pretraining. It has also enabled them to achieve state-of-the-art results on computer vision benchmarks, setting new standards for image analysis.

In summary, the advantages of transformer models stem from their innovative design and powerful capabilities. Their ability to capture global context, make efficient use of parallel hardware, and adapt to various tasks has made them central to modern image processing.

Challenges and Limitations of Transformer Models in Image Processing

While transformer models in image processing have shown remarkable performance, they also come with certain challenges and limitations. Understanding these drawbacks is essential for evaluating their practicality in different applications. Let’s take a closer look at the key issues:

1. High Computational Costs

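One of the most significant limitations of transformer models in image processing is their demand for high computational power. The self-attention mechanism, which is central to their architecture, computes a score for every pair of tokens (image patches), so its compute and memory costs grow quadratically with the number of patches. Since the number of patches itself grows with the image area, doubling an image's width and height quadruples the patch count and increases the attention cost roughly sixteenfold.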

When processing high-resolution images, this results in a substantial increase in memory usage and computation time. As a result, training and deploying transformer models often require advanced hardware, like GPUs or TPUs, making them less accessible for smaller projects.
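A quick back-of-the-envelope calculation makes the quadratic growth concrete. Assuming 16x16 patches, float32 scores, and a single attention head in a single layer (real models multiply this by the number of heads, layers, and images in a batch), the sketch below counts the entries in one attention matrix; `attention_cost` is an illustrative helper, not a library function.

```python
def attention_cost(image_size, patch_size, bytes_per_value=4):
    """Tokens, attention scores, and bytes for one head on a square image."""
    tokens = (image_size // patch_size) ** 2  # number of patches
    entries = tokens * tokens                 # one score per (query, key) pair
    return tokens, entries, entries * bytes_per_value

for size in (224, 448, 896):
    tokens, entries, nbytes = attention_cost(size, patch_size=16)
    print(f"{size}px: {tokens} tokens, {entries:,} scores, {nbytes / 2**20:.1f} MiB per head")
```

Doubling the side length from 224 to 448 pixels multiplies the token count by 4 (196 to 784) and the number of attention scores by 16 (38,416 to 614,656).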

2. Large Dataset Requirements

Transformer models excel when trained on vast amounts of data. Their performance improves with larger datasets, which helps them capture intricate patterns and avoid overfitting. However, acquiring and annotating large-scale image datasets can be time-consuming and expensive.

For smaller datasets, transformer models in image processing may struggle with generalization, leading to suboptimal results compared to traditional methods like CNNs. Data augmentation techniques can help mitigate this issue, but they don’t completely resolve it.
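As one example of such augmentation, the classic combination of random horizontal flips and padded random crops can be sketched in NumPy. This is an illustrative assumption rather than a complete recipe: ViT training recipes such as DeiT rely on considerably heavier augmentation (for example RandAugment, Mixup, and CutMix). The function name `augment` is hypothetical.

```python
import numpy as np

def augment(image, rng, crop_size, pad=4):
    """Random horizontal flip plus padded random crop for an (H, W, C) image."""
    if rng.random() < 0.5:
        image = image[:, ::-1, :]  # mirror left-right
    # Reflect-pad the borders so crops can shift the content by up to `pad` pixels.
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    top = rng.integers(0, padded.shape[0] - crop_size + 1)
    left = rng.integers(0, padded.shape[1] - crop_size + 1)
    return padded[top:top + crop_size, left:left + crop_size, :]

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
views = [augment(image, rng, crop_size=32) for _ in range(4)]
print([v.shape for v in views])
```

Each call produces a slightly different view of the same image, which effectively enlarges a small dataset without new annotations.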

3. Longer Training Times

Due to their complex architecture and high parameter count, transformer models often require longer training times. The self-attention mechanism, while powerful, computes a score for every pair of patches in every head of every layer, and these calculations add up quickly across many training epochs.

Even with advanced hardware, training a vision transformer can take days or weeks, depending on the dataset size and model configuration. This extended training period increases development costs and makes rapid experimentation more challenging.

4. Interpretability Issues

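The interpretability of transformer models in image processing remains a significant challenge. CNNs offer relatively intuitive tools such as feature maps and convolutional filters, which can be inspected directly. Transformers spread their processing across many layers and heads of attention weights; these weights are easy to plot, but a single layer's attention map does not reliably show which image regions actually drove a prediction.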

This lack of transparency makes it difficult to pinpoint why a model made a particular decision. In critical applications like medical imaging or autonomous driving, understanding model behavior is essential for ensuring reliability and trust.

5. Risk of Overfitting

With their high capacity and large number of parameters, transformer models are prone to overfitting, especially when working with limited data. If the model memorizes training examples instead of generalizing from them, its performance on unseen images can suffer.

To address this, techniques like dropout, data augmentation, and transfer learning are often used. However, the risk of overfitting remains higher compared to simpler architectures.

In conclusion, while transformer models in image processing offer impressive capabilities, their challenges cannot be overlooked. Balancing their powerful performance with practical constraints requires careful consideration, particularly when dealing with limited resources or smaller datasets.

Future Trends of Transformer Models in Image Processing

As technology continues to evolve, transformer models in image processing are set to play an even more transformative role in the field of computer vision. Their advanced architecture and potential for innovation are paving the way for exciting developments. Let’s explore the key trends shaping their future:

1. Efficient Transformer Architectures

One major focus in the future will be improving the efficiency of transformer models in image processing. Current models often require substantial computational power, but researchers are developing more lightweight and optimized architectures.

Techniques like sparse attention (restricting each patch to attend to a subset of the others, such as a local window), reducing the number of patches that must be processed, and hybrid models (combining CNNs and transformers) are expected to reduce the complexity while maintaining high performance. This will make transformers more accessible for real-time applications and devices with limited processing capacity.
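Windowed attention, the local form of sparse attention used by models such as the Swin Transformer, can be sketched by splitting the token grid into non-overlapping windows and computing attention only inside each window. For brevity this NumPy sketch uses the tokens directly as Queries, Keys, and Values (no learned projections) and omits the shifted windows Swin uses to pass information between neighbouring windows; the 56x56 grid and 7x7 windows are illustrative sizes, and the function names are hypothetical.

```python
import numpy as np

def window_partition(tokens, window):
    """Split an (H, W, D) token grid into non-overlapping window x window groups.

    Returns (num_windows, window * window, D). H and W must be divisible by window.
    """
    h, w, d = tokens.shape
    x = tokens.reshape(h // window, window, w // window, window, d)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window * window, d)

def windowed_attention(tokens, window):
    """Self-attention restricted to each window (tokens reused as Q, K and V)."""
    groups = window_partition(tokens, window)  # (num_windows, M*M, D)
    # Scores are computed only between tokens that share a window.
    scores = groups @ groups.transpose(0, 2, 1) / np.sqrt(groups.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ groups, weights

rng = np.random.default_rng(0)
grid = rng.normal(size=(56, 56, 32))  # 56 x 56 = 3,136 tokens, 32 dims each
out, weights = windowed_attention(grid, window=7)
global_scores = (56 * 56) ** 2  # full attention: every token pair
local_scores = weights.size     # windowed: pairs inside each window only
print(f"global: {global_scores:,} scores, windowed: {local_scores:,} scores")
```

For 3,136 tokens and 7x7 windows, the windowed version computes 153,664 attention scores instead of 9,834,496, a 64-fold reduction, and with a fixed window size its cost grows linearly rather than quadratically with the number of tokens.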

2. Enhanced Interpretability

As transformers become more widely adopted, the need for transparent and interpretable models grows. Future research will likely focus on developing methods to visualize attention maps and understand the decision-making process of these models.
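One widely used visualization method is attention rollout (Abnar and Zuidema, 2020), which averages each layer's attention over heads, adds the identity matrix to account for residual connections, re-normalizes, and multiplies the layers together. Below is a minimal NumPy sketch, assuming the per-layer attention weights have already been extracted from a ViT whose token 0 is [CLS]; the random attention maps only stand in for real ones, and the function names are illustrative.

```python
import numpy as np

def attention_rollout(attentions):
    """Combine per-layer attention maps into one token-to-token relevance map.

    attentions: list of (heads, N, N) attention-weight arrays, first layer first.
    Returns an (N, N) array; row i estimates how much output token i draws on
    each input token once all layers are taken into account.
    """
    n = attentions[0].shape[-1]
    rollout = np.eye(n)
    for layer in attentions:
        a = layer.mean(axis=0)                 # average over heads
        a = a + np.eye(n)                      # account for the residual connection
        a = a / a.sum(axis=-1, keepdims=True)  # re-normalize rows to sum to 1
        rollout = a @ rollout                  # compose with the layers below
    return rollout

def random_attention(rng, heads, n):
    # Placeholder for attention weights extracted from a real model.
    logits = rng.normal(size=(heads, n, n))
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
layers = [random_attention(rng, heads=3, n=17) for _ in range(4)]  # [CLS] + 4x4 patches
rollout = attention_rollout(layers)
heatmap = rollout[0, 1:].reshape(4, 4)  # how much [CLS] draws on each patch
print(heatmap.round(3))
```

Reshaping the [CLS] row into the 4x4 patch grid gives a coarse heatmap that can be overlaid on the input image. Rollout is itself an approximation, so such maps are best read as hints about model behavior rather than proofs.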

By improving interpretability, transformer models in image processing will become more reliable, especially in critical fields like healthcare and autonomous systems, where understanding model behavior is essential.

3. Multimodal Integration

Transformers are well-suited for handling multiple data types, and future applications will likely leverage this capability. Combining image data with text, audio, or video inputs can create more comprehensive and intelligent models.

For instance, models that integrate natural language processing with image recognition can enhance applications like image captioning, content creation, and visual question answering. This multimodal approach will push the boundaries of what’s possible in image processing.
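A common pattern behind such models, popularized by CLIP, is to embed images and text with separate encoders into a shared space and compare them with cosine similarity. The sketch below assumes the embeddings already exist (random vectors stand in for encoder outputs) and uses an illustrative temperature of 100 to turn similarities into probabilities over candidate captions; `zero_shot_scores` is a hypothetical name.

```python
import numpy as np

def normalize(x):
    # Scale vectors to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def zero_shot_scores(image_emb, text_embs, temperature=100.0):
    """Probability that each caption matches the image.

    image_emb: (D,) output of an image encoder (e.g. a ViT).
    text_embs: (T, D) outputs of a text encoder for T candidate captions.
    """
    sims = normalize(text_embs) @ normalize(image_emb)  # (T,) cosine similarities
    logits = temperature * sims
    logits -= logits.max()
    probs = np.exp(logits)
    return probs / probs.sum()

rng = np.random.default_rng(0)
captions = ["a photo of a cat", "a photo of a dog", "a diagram"]
text_embs = rng.normal(size=(3, 64))                  # hypothetical text-encoder outputs
image_emb = text_embs[1] + 0.1 * rng.normal(size=64)  # hypothetical image-encoder output
probs = zero_shot_scores(image_emb, text_embs)
print(captions[int(probs.argmax())])
```

Because the candidate captions are ordinary text, the same trained model can be pointed at new labels without retraining, which is what enables zero-shot image classification and text-based image search.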

4. Transfer Learning and Pretrained Models

The popularity of pretrained models like Vision Transformers (ViTs) has already demonstrated the effectiveness of transfer learning. Moving forward, more robust and versatile pretrained transformer models in image processing will become available.

These models will be fine-tuned for specific tasks with minimal data, reducing the need for large datasets and extensive training. This will democratize access to high-performance image processing for smaller companies and independent developers.
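The lightest form of this fine-tuning is a linear probe: the pretrained backbone stays frozen and only a small classification head is trained on its output features. The NumPy sketch below assumes the features have already been extracted; synthetic clustered vectors stand in for real ViT embeddings, and `train_linear_head` is a hypothetical helper that runs plain gradient descent on the softmax cross-entropy loss.

```python
import numpy as np

def train_linear_head(features, labels, num_classes, lr=0.5, steps=200):
    """Fit a softmax classifier on frozen backbone features (a "linear probe").

    features: (N, D) embeddings from a pretrained model, which is not updated.
    labels: (N,) integer class ids.
    """
    n, d = features.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(steps):
        logits = features @ w + b
        logits -= logits.max(axis=1, keepdims=True)
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b

rng = np.random.default_rng(0)
centers = 3.0 * np.eye(3, 16)  # synthetic stand-ins for class clusters in feature space
labels = np.repeat(np.arange(3), 20)
features = centers[labels] + 0.3 * rng.normal(size=(60, 16))
w, b = train_linear_head(features, labels, num_classes=3)
accuracy = ((features @ w + b).argmax(axis=1) == labels).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Full fine-tuning would also update the backbone's weights, which usually improves accuracy further but needs more data and compute than training the head alone.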

5. Real-Time Applications

With improvements in efficiency and scalability, transformers are poised to play a larger role in real-time image processing. Applications like augmented reality (AR), virtual reality (VR), and real-time video analysis will benefit from the speed and accuracy of transformer models.

As hardware capabilities continue to advance, the deployment of transformer models in image processing on mobile devices and edge computing platforms will become more practical. This will open up opportunities for interactive and dynamic visual applications.

In short, the future of transformers in image processing is filled with promise. Through enhanced efficiency, greater interpretability, and innovative applications, these models will continue to shape the evolution of computer vision and redefine what’s possible in the digital world.

Conclusion

Transformer models in image processing have revolutionized the field of computer vision with their powerful architecture and ability to capture long-range dependencies and complex patterns. Their global attention mechanism and scalability make them superior to traditional methods like CNNs for many visual tasks. From enhanced feature extraction to real-time applications, transformers offer immense potential.

Despite their advantages, challenges like high computational costs, large data requirements, and interpretability issues cannot be ignored. However, ongoing research and technological advancements are addressing these limitations, paving the way for more efficient and accessible models.

As we look ahead, transformer models in image processing will continue to evolve, driving innovation through efficient architectures, multimodal integration, and real-time capabilities. Their transformative impact on industries like healthcare, gaming, and autonomous systems is just beginning — making transformers an essential tool in the future of computer vision.